Microsoft 365 Copilot success starts with smart data governance. Here’s why trust, compliance, and control come first, and how Microsoft Purview helps you get there.

Adopting Microsoft 365 Copilot – or any kind of enterprise AI – is built on big promises.

Better productivity, faster decisions, greater efficiency, smarter ways of working.

You know, all the cool stuff that saves the organisation time and money. That enables the innovation, intelligence, and agility required for commercial and operational gain.

What’s more, it can actually deliver all this.

But (there’s always a but, isn’t there?), behind every AI interaction is your data.

And if that data isn’t secure, well-governed, and compliant, the risks can easily outweigh the rewards.

Is your data ready for AI adoption?

Without a secure and compliant data foundation, enterprise AI cannot scale responsibly or sustainably.

Microsoft 365 Copilot brings generative AI into everyday productivity tools. It works by drawing on organisational data to generate emails, summarise documents, or suggest actions. But to do this safely and effectively, organisations need complete confidence in how their data is protected and governed.

Sensitive information, like financial data, intellectual property, and customer records, must be appropriately labelled, access-controlled, and monitored.

Putting generative AI on top of that data dramatically raises the stakes for how it is governed.

How Microsoft Purview enables Copilot readiness

Adopting Microsoft 365 Copilot into your Microsoft estate is no different to any other major transformation project. It needs to be done strategically, methodically, and with care.

And the sanctity of your data needs to be at the core.

This is where Microsoft Purview can play a pivotal role.

As a native part of the Microsoft ecosystem, it’s built to seamlessly govern the same data, productivity tools, and cloud services that Copilot relies on.

This alignment means governance, protection, and compliance policies can be applied and enforced consistently – without additional complexity, third-party integrations, or duplicated effort.

For instance:

- It’s built natively into the Microsoft ecosystem, which means no connectors or bolt-ons are required.

- It provides shared labels, DLP, and policy frameworks across Microsoft 365, Azure, and Fabric.

- Consolidated licensing and management mean an overall lower total cost of ownership.

- It ensures that governance is entrenched throughout your entire strategy.

So, that’s the overview. But what does that look like in reality?

Free Guide

The Ultimate Guide to Microsoft Security

The most comprehensive guide to Microsoft Security. Over 50 pages. Microsoft licensing and pricing simplified.

Discover technologies that:

- Detect and disrupt advanced attacks at machine-speed

- Tap into the world’s largest threat intelligence network

- Protect identities, devices, and data with ease

Addressing common data security challenges in AI adoption

So, let’s look at some of the challenges organisations can face when preparing to introduce Microsoft 365 Copilot and, more importantly, how tools like Purview can provide the grounding for a secure, scalable rollout.

How to monitor, manage, and protect your sensitive data

Tools like Copilot rely on access to organisational data across Microsoft 365. Without visibility into what that data includes, and how sensitive it is, there’s a real risk of exposing confidential or regulated content.

How Microsoft Purview helps:

- Automated scanning of Microsoft 365 workloads (including SharePoint, OneDrive, Teams and Exchange) to identify and catalogue data at scale.

- Built-in and custom classifiers that detect and categorise sensitive information, such as personal data, financial records, IP, and commercial-in-confidence content.

- Sensitivity labels that can be applied automatically based on classification results, helping ensure consistent data handling.

Microsoft Purview offers AI-powered discovery and labelling capabilities that continuously update to support evolving data types and regulatory requirements. This allows you to understand exactly what data Copilot might interact with and, crucially, to take action before it happens.
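To make the classify-then-label idea concrete, here is a minimal Python sketch. It is not Purview's API or how its classifiers are implemented. It is a toy model that assumes two simple detection rules (a payment card number checked with the Luhn checksum, and an email address) and three hypothetical label names.

```python
import re

# Hypothetical detection rules and label names, for illustration only.
# Real Purview classifiers are far richer than two regular expressions.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if len(digits) < 13:
        return False
    total = 0
    # Double every second digit, counting from the right.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def classify(text: str) -> set[str]:
    """Return the sensitive information types detected in `text`."""
    found = set()
    if any(luhn_valid(m.group()) for m in CARD_PATTERN.finditer(text)):
        found.add("payment_card")
    if EMAIL_PATTERN.search(text):
        found.add("email_address")
    return found


def suggest_label(text: str) -> str:
    """Map classification results to a hypothetical sensitivity label."""
    types = classify(text)
    if "payment_card" in types:
        return "Highly Confidential"
    if types:
        return "Confidential"
    return "General"
```

The point of the sketch is the order of operations: content is classified first, and the label follows from the classification result rather than from a person remembering to apply it.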

The outcome:

Sensitive data is proactively identified, clearly labelled, and protected by default. This reduces the risk of exposure or inappropriate use by Copilot or any other AI tools.

Managing user access and stopping AI overreach

For Microsoft 365 Copilot to be effective, it needs access to relevant data, but it shouldn’t have access to everything.

Controlling access and enforcing appropriate handling is essential for avoiding both internal and regulatory risks.

How Microsoft Purview helps:

- Data Loss Prevention (DLP) policies that monitor and restrict sharing of sensitive information – whether within Microsoft 365 or in AI-generated content.

- Label-based protection rules that automatically enforce encryption, usage restrictions and expiry controls, even as content moves or is reshared.

Purview makes it possible to align AI access with existing data protection policies. Labels are persistent and follow the data, ensuring consistent protection even as content flows across teams, devices or platforms.
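As a rough illustration of labels that follow the data, the Python sketch below models a label-driven rule check. Everything in it is an assumption made for the example: the label names, the destination names, and the rule table are hypothetical, and real DLP policies are configured in Purview rather than written as code.

```python
from dataclasses import dataclass

# Hypothetical actions, labels and destinations, for illustration only.
BLOCK, WARN, ALLOW = "block", "warn", "allow"

RULES = {
    # (sensitivity label, destination) -> action
    ("Highly Confidential", "external"): BLOCK,
    ("Highly Confidential", "ai_prompt"): BLOCK,
    ("Confidential", "external"): WARN,
    ("Confidential", "ai_prompt"): WARN,
}


@dataclass(frozen=True)
class Document:
    name: str
    label: str

    def copy_as(self, new_name: str) -> "Document":
        # The label travels with the content when it is copied or reshared.
        return Document(name=new_name, label=self.label)


def evaluate(doc: Document, destination: str) -> str:
    """Return the action for sending `doc` to `destination` (default: allow)."""
    return RULES.get((doc.label, destination), ALLOW)
```

Because the decision depends only on the label and the destination, a renamed or reshared copy is treated exactly like the original.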

It’s worth noting that, alongside Microsoft Purview’s tools, SharePoint permissions also play a critical role in shaping what Copilot can access. Copilot uses Microsoft Graph to determine content availability, which is governed in part by how SharePoint sites are managed:

- SharePoint admins and site owners can reduce oversharing by applying sharing policies and running access reviews.

- SharePoint Advanced Management helps surface potentially overexposed content and clean up outdated or inactive sites.

- Site-level controls, such as Restricted Access Control and Restricted Content Discovery policies, allow admins to limit a site to specific user groups or exclude its content from Copilot entirely.

Getting these controls right helps ensure Copilot responses are based on current, relevant, and appropriately scoped data.
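The scoping logic described above can be pictured with a small Python sketch. It is a simplified model, not how Microsoft Graph or SharePoint evaluate access: the `Site` class, its `members` set, and the `hidden_from_discovery` flag are hypothetical stand-ins for site permissions and a discovery restriction.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    members: set[str] = field(default_factory=set)
    # Hypothetical flag standing in for a site-level discovery restriction.
    hidden_from_discovery: bool = False


def sites_copilot_may_use(user: str, sites: list[Site]) -> list[str]:
    """Return names of sites whose content could ground a response for `user`."""
    return [
        site.name
        for site in sites
        if user in site.members and not site.hidden_from_discovery
    ]
```

In this model an overshared site shows up immediately: add everyone to `members` and its content becomes eligible for every user's responses, which is why access reviews matter before rollout.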

The outcome:

Microsoft 365 Copilot’s data access stays tightly aligned with user permissions. Sensitive content is handled appropriately, and exposure risk is significantly reduced, without limiting business productivity.

AI usage and compliance challenges

Deploying Microsoft 365 Copilot doesn’t end with initial configuration.

Organisations need visibility into how their AI tools are being used. They need to know, at all times, where risks are emerging and whether policies are being followed.

How Microsoft Purview helps:

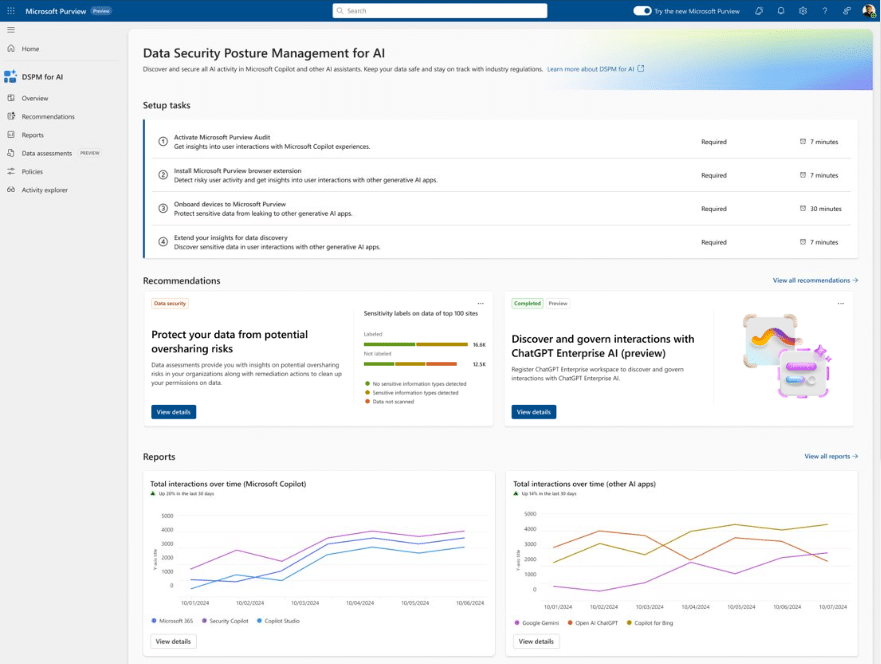

- Data Security Posture Management for AI (DSPM for AI) provides a centralised view of AI-related data risks. This shows where sensitive data is accessed, by whom, and how it’s being used.

- Usage analytics and activity monitoring reveal trends, detect anomalies, and surface potential compliance issues before they escalate.

- Policy templates and risk recommendations help configure guardrails for how AI prompts and outputs handle sensitive information, reducing oversharing risks.

- Enhanced DLP coverage, including endpoint and cloud-based controls, helps prevent sensitive data from being entered into third-party AI tools like ChatGPT.

Purview also includes real-time alerting and advanced audit capabilities, enabling security and compliance teams to investigate incidents quickly, generate audit trails, and demonstrate accountability.
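For a feel of what activity monitoring involves, here is a deliberately simple Python sketch. It assumes a hypothetical event shape of `(user, label)` pairs and a fixed threshold; it does not reflect Purview's audit log schema or its detection logic.

```python
from collections import Counter

# Hypothetical labels treated as sensitive, for illustration only.
SENSITIVE_LABELS = {"Confidential", "Highly Confidential"}


def flag_heavy_users(events, threshold):
    """Return users with more than `threshold` sensitive AI interactions.

    `events` is an iterable of (user, label) pairs, where `label` is the
    sensitivity label of the content an AI tool touched on the user's behalf.
    """
    counts = Counter(user for user, label in events if label in SENSITIVE_LABELS)
    return sorted(user for user, count in counts.items() if count > threshold)
```

A fixed threshold is the crudest possible signal. It is used here only to show the shape of the problem: collect interactions, filter for sensitive content, and surface the outliers for a person to review.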

The outcome:

You gain operational control over your AI environment, backed by the evidence you need to prove compliance, respond to regulators, and build trust internally.

Read more about Purview’s expanded AI compliance features:

A centralised command centre to oversee and manage how AI tools access and use data. It supports secure deployment of Microsoft 365 Copilot and other AI services by reducing risk exposure.

Key features:

- Centralised dashboard for visualising AI-related data risks across the organisation.

- Activity insights that highlight usage trends, anomalies, and compliance gaps.

- Ready-to-deploy policies to reduce oversharing of sensitive data in prompts and outputs.

- Ongoing risk assessments and remediation guidance to reduce exposure over time.

Endpoint DLP rules can be used to warn or block users from pasting or entering sensitive data into third-party AI tools, like ChatGPT, or sharing outputs externally across endpoints or cloud services.

Key features:

- Create targeted policies to prevent sharing of protected data with AI tools like Copilot and ChatGPT.

- Use endpoint DLP to detect and block sensitive information being pasted or typed into browser-based AI tools.

- Extend DLP coverage across Microsoft 365 apps, ensuring consistency and reducing policy blind spots.

Real-time alerts and dashboards help track data access, policy violations, and usage trends. This includes deeper visibility into how Copilot and other AI tools access and interact with sensitive or protected data.

Key features:

- Full visibility into user and AI interactions with sensitive content.

- Alerts for suspicious behaviour.

- Detailed audit trails to support forensic investigations, compliance checks, and incident response.

These tools ensure that AI-generated and human communication stays within policy boundaries, supporting internal governance and legal readiness.

Key features:

- Monitor collaboration tools (e.g. Teams, Outlook) for risky or non-compliant messages.

- Investigate flagged content to determine intent, origin, and compliance impact.

- Streamline discovery workflows with powerful content search, tagging, and case management tools.

How to enable consistent AI governance across your entire Microsoft ecosystem

Microsoft 365 Copilot doesn’t live in a single app. It’s embedded across your entire Microsoft estate. Governance, therefore, needs to be consistent and scalable across your broader environment.

How Microsoft Purview helps:

- Deep integration across Microsoft 365 apps, including Teams, Word, Excel, and Outlook, ensuring unified governance where Copilot is most active.

- Seamless connection with Microsoft Defender for Cloud Apps and Microsoft Sentinel, enabling monitoring, threat detection and policy enforcement across data flows.

- Support for Microsoft Fabric, Azure Synapse and Azure AI, extending data governance and protection into analytics platforms and AI model inputs and outputs.

By embedding Purview into this broader ecosystem, organisations gain end-to-end visibility and policy control, from data creation to AI-generated content and decision-making logic.

The outcome:

You achieve consistent governance wherever Copilot interacts with data. Security, compliance and visibility are unified, giving you a future-proof foundation for AI at scale.

How compliance can enable AI innovation

Data protection and compliance shouldn’t be viewed as a blocker to innovation.

When done right, they:

- Accelerate Microsoft 365 Copilot rollouts by building stakeholder confidence

- Reduce risk while enabling broader access to high-value data

- Provide a clear framework for scaling AI across more teams and use cases

The goal isn’t just to “tick the box” on regulations like GDPR or industry-specific standards. It’s to embed compliance into the fabric of AI initiatives, so innovation can scale safely and sustainably.

It’s all about putting the safety measures in place from the outset to ensure you can expand and innovate with confidence and without hindrance.

Final thoughts

It’s easy to see why we’re now witnessing the widescale adoption of Microsoft 365 Copilot and AI in general. The possibilities and potential gains are certainly compelling and hard to ignore. As is the fear that you might fall behind the competition if you don’t go all in on AI.

So we get that it’s tempting to simply switch on these capabilities and surge ahead. And we’re not suggesting for a moment that you dampen the enthusiasm. But let’s get the planning in place first.

Microsoft 365 Copilot interacts with vast amounts of organisational data, and without the right safeguards, the risks to privacy, security, and compliance can quickly spiral.

Getting this right doesn’t mean slowing innovation. It means creating the conditions where innovation can thrive confidently and securely. Strong governance, data protection, and oversight provide the fundamental building blocks for success in the AI sphere.

Microsoft Purview provides the frameworks and tools that make this possible. With the right foundations in place, your organisation can embrace Copilot’s power, knowing that your data, your people, and your reputation are protected every step of the way.

Key takeaways

- Copilot success relies on trusted, well-managed organisational data.

- Lack of governance increases the risk of data leaks and compliance breaches.

- Microsoft Purview helps identify, classify and label sensitive information.

- Access policies ensure Copilot only interacts with authorised data.

- AI-generated content must be protected, monitored and controlled.

- Audit trails and reporting are essential for demonstrating compliance.

- Integrated governance across Microsoft tools ensures consistent protection.

- Robust data security enables faster, safer enterprise AI adoption.

Need some advice for your Microsoft 365 Copilot adoption? Get in touch and speak to our team.
